Titanic Survival Prediction

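In data-mining competitions the model is often not the most important part; the data processing done before modeling is what really decides the score.

So let's learn data preprocessing. As a beginner, I'll start with this most basic project.

Let's go!!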

Data Analysis and Processing

import pandas as pd

train_df = pd.read_csv('./titanic/train.csv')

test_df = pd.read_csv('./titanic/test.csv')

combine = [train_df, test_df]

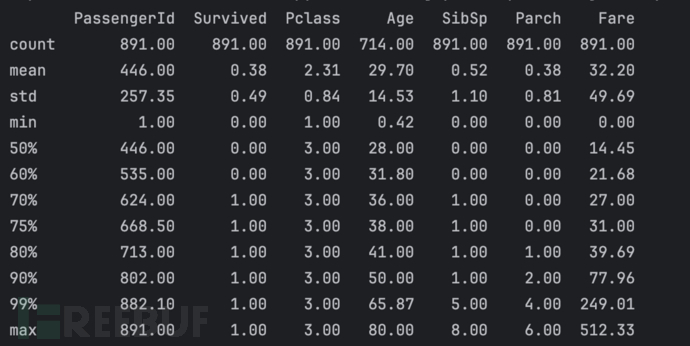

print(round(train_df.describe(percentiles=[.5, .6, .7, .75, .8, .9, .99]),2))

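First, let's look at the overall characteristics of the data.

Age has missing values (only 714 of the 891 training rows have an age).

The overall survival rate is about 38%.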
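`describe()` only covers numeric columns, so it shows the gap in Age but not in string columns such as Cabin or Embarked. A minimal sketch of listing every column's missing count with `isnull().sum()`; the frame below is hypothetical toy data mimicking a few Titanic columns, and on the real data you would call the same method on `train_df`.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data mimicking a few Titanic columns (not the real dataset)
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 26.0, np.nan],
    "Cabin": [np.nan, "C85", np.nan, np.nan],
    "Embarked": ["S", "C", np.nan, "S"],
    "Survived": [0, 1, 1, 0],
})

# Number of missing values in every column, numeric or not
missing = toy.isnull().sum()
print(missing)

# The survival rate is simply the mean of the 0/1 Survived column
survival_rate = toy["Survived"].mean()
print(survival_rate)
```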

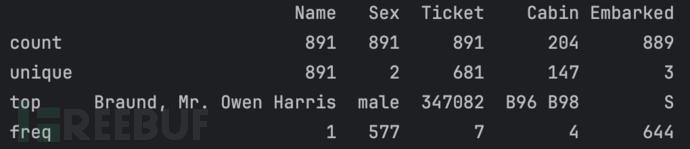

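Running train_df.describe(include=['O']) summarizes the string (object) columns:

most passengers boarded at port S, and most passengers were male.

Next, let's see how each feature relates to Survived.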

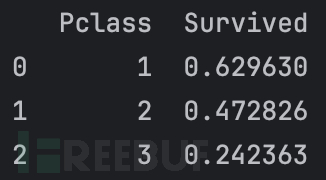

Pclass vs. Survived

train_df[['Pclass','Survived']].groupby(['Pclass'], as_index=False).mean().sort_values(by='Survived', ascending=False)

The higher the cabin class (Pclass 1 is the highest), the higher the chance of survival, so Pclass is an important feature.

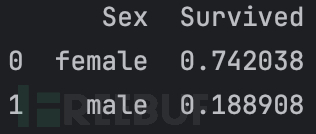

Sex vs. Survived

train_df[['Sex','Survived']].groupby(['Sex'], as_index=False).mean().sort_values(by='Survived', ascending=False)

Women had a much higher survival rate, so Sex is an important feature.

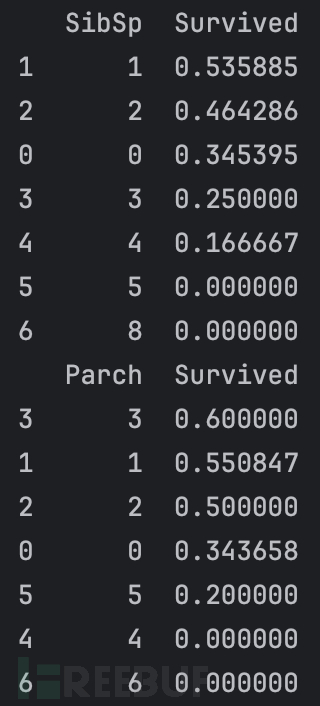

SibSp, Parch vs. Survived

train_df[['SibSp','Survived']].groupby(['SibSp'], as_index=False).mean().sort_values(by='Survived',ascending=False)

train_df[['Parch','Survived']].groupby(['Parch'], as_index=False).mean().sort_values(by='Survived',ascending=False)

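For some values of these two features the survival rate is 0 (for example SibSp = 5 or 8), so their relationship with Survived is not a simple one.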
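The same groupby pattern is repeated for every feature. A small helper, `survival_by` (hypothetical, not from the original post), that reports the group size next to the survival rate makes it easier to tell whether a rate of 0 comes from a group with only a handful of passengers. The toy data below is made up for illustration.

```python
import pandas as pd

def survival_by(df: pd.DataFrame, col: str) -> pd.DataFrame:
    """Survival rate ('mean') and group size ('count') per value of col, highest rate first."""
    return (
        df.groupby(col)["Survived"]
        .agg(["mean", "count"])
        .sort_values(by="mean", ascending=False)
    )

# Hypothetical toy data: the SibSp = 5 group contains a single passenger
toy = pd.DataFrame({
    "SibSp": [0, 0, 0, 1, 1, 5],
    "Survived": [0, 1, 0, 1, 1, 0],
})
table = survival_by(toy, "SibSp")
print(table)
```

On the real data, `survival_by(train_df, "SibSp")` and `survival_by(train_df, "Parch")` would show the size of each zero-survival group.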

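Ticket, Cabin vs. Survived

Ticket numbers have a high duplicate rate (22%) and show little relationship with survival, so the column can be dropped.

Cabin has too many missing values (687 of the 891 training rows) to be useful, so drop it as well.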
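Both ratios can be computed directly. The post does not say how the 22% duplicate rate was measured; the sketch below assumes it means the share of rows whose ticket repeats an earlier row (`duplicated().mean()`), which is one possible definition. The data is hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical toy columns (not the real data)
toy = pd.DataFrame({
    "Ticket": ["A/5 21171", "PC 17599", "113803", "113803", "PC 17599"],
    "Cabin": [np.nan, "C85", "C123", np.nan, np.nan],
})

# Assumed definition: share of rows whose ticket number repeats an earlier row
dup_ratio = toy["Ticket"].duplicated().mean()
# Share of rows with no Cabin value
cabin_missing = toy["Cabin"].isnull().mean()
print(dup_ratio, cabin_missing)
```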

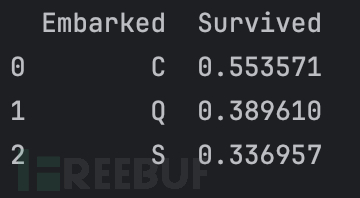

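Embarked vs. Survived

train_df[['Embarked','Survived']].groupby(['Embarked'], as_index=False).mean().sort_values(by='Survived',ascending=False)

As the initial analysis already suggested, passengers who boarded at different ports had different survival rates, so Embarked should be kept as an important feature.

Extracting New Features from Existing Ones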

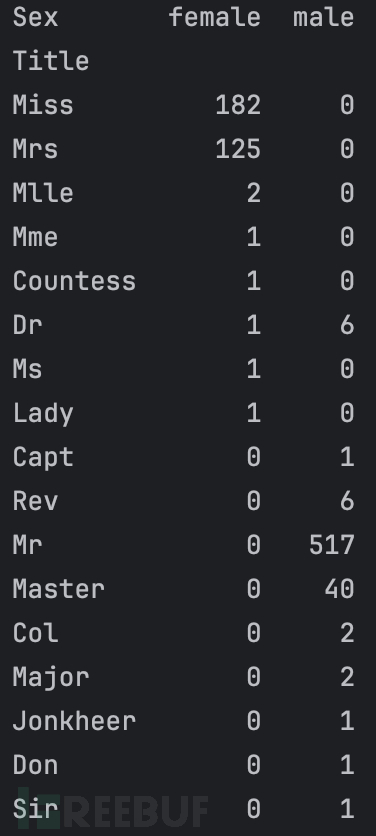

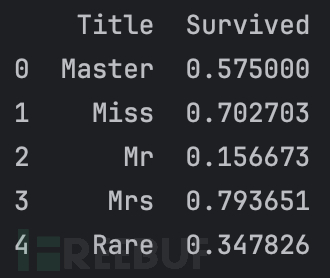

Every name in the table contains a title, such as Miss or Mr, and I guessed that the title is related to survival.

Title

So let's extract it.

# Extract the Title feature with a regular expression (raw string so "\." is not treated as a string escape)
for dataset in combine:
    dataset['Title'] = dataset.Name.str.extract(r'([A-Za-z]+)\.', expand=False)

pd.crosstab(train_df['Title'], train_df['Sex']).sort_values(by='female', ascending=False)

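combine is a list holding both the training set and the test set, so each loop applies the same transformation to both.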
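A small sketch of why the `combine` loop works, on hypothetical toy frames with Titanic-style names: the list holds references to the same DataFrame objects, so adding a column inside the loop changes `train_df` and `test_df` themselves. Reassigning a name (e.g. `train_df = train_df.drop(...)`) creates a new object that the list does not know about, which is why the full code rebuilds `combine` after every drop.

```python
import pandas as pd

# Hypothetical toy train/test frames with Titanic-style name strings
train = pd.DataFrame({"Name": ["Braund, Mr. Owen Harris", "Heikkinen, Miss. Laina"]})
test = pd.DataFrame({"Name": ["Kelly, Mr. James", "Rice, Master. Eugene"]})
combine = [train, test]

for dataset in combine:
    # Capture the word that directly precedes the first "." (the title)
    dataset["Title"] = dataset["Name"].str.extract(r"([A-Za-z]+)\.", expand=False)

# Both original frames received the new column
print(train["Title"].tolist(), test["Title"].tolist())

# Reassigning creates a new object; combine still points at the old frame
train = train.drop(["Name"], axis=1)
print(combine[0] is train)
```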

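This shows the rough distribution of titles, but some titles occur too rarely, which hurts the classification later.

Merge the rare titles into a single category: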

for dataset in combine:
    dataset['Title'] = dataset['Title'].replace(['Lady', 'Countess', 'Capt', 'Col',
        'Don', 'Dr', 'Major', 'Rev', 'Sir', 'Jonkheer', 'Dona'], 'Rare')
    dataset['Title'] = dataset['Title'].replace(['Mlle', 'Ms'], 'Miss')
    dataset['Title'] = dataset['Title'].replace('Mme', 'Mrs')

print(train_df[['Title', 'Survived']].groupby(['Title'], as_index=False).mean())

Note that these values are strings, which the models cannot use directly, so convert them to numbers.

title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}

for dataset in combine:
    dataset['Title'] = dataset['Title'].map(title_mapping)
    dataset['Title'] = dataset['Title'].fillna(0)
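`Series.map` with a dict returns NaN for every value that is not a key, which is what the `fillna(0)` line guards against: any title not covered by the replace lists ends up as 0. A minimal sketch; "Unknown" is a hypothetical unmapped title.

```python
import pandas as pd

title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}
titles = pd.Series(["Mr", "Rare", "Mrs", "Unknown"])  # "Unknown" is hypothetical

mapped = titles.map(title_mapping)        # keys missing from the dict become NaN
print(mapped.tolist())

filled = mapped.fillna(0).astype(int)     # 0 marks "title not in the mapping"
print(filled.tolist())
```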

While we're at it, convert Sex to numbers as well.

for dataset in combine:
    dataset['Sex'] = dataset['Sex'].map({'female': 1, 'male': 0}).astype(int)

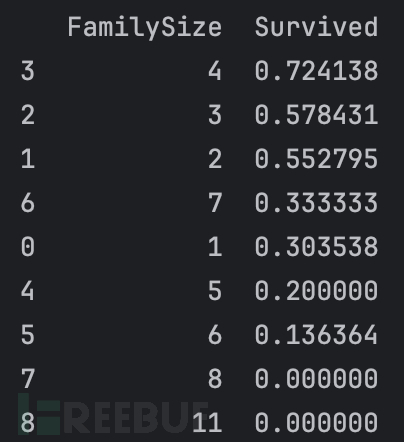

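Family

This is a clever idea I hadn't thought of.

Combine SibSp and Parch into a new feature, FamilySize: the number of family members on board, counting siblings, spouses, parents, children and the passenger themself.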

for dataset in combine:
    dataset['FamilySize'] = dataset['SibSp'] + dataset['Parch'] + 1

train_df[['FamilySize', 'Survived']].groupby(['FamilySize'], as_index=False).mean().sort_values(by='Survived', ascending=False)

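This creates the combined feature and shows its relationship with Survived at the same time.

As the result shows, this is an important feature.

Why +1? Because the family includes the passenger themself.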

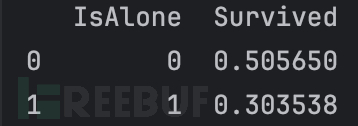

New feature IsAlone

IsAlone is 0 when the passenger is not traveling alone and 1 when traveling alone.

Another point I wouldn't have thought of.

for dataset in combine:
    dataset['IsAlone'] = 0
    dataset.loc[dataset['FamilySize'] == 1, 'IsAlone'] = 1

train_df[['IsAlone', 'Survived']].groupby(['IsAlone'], as_index=False).mean()

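Here the author of the approach I was following replaced the family features with IsAlone (the full code below drops SibSp, Parch and FamilySize); I'm not sure why.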
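The two-step IsAlone assignment (set everything to 0, then set rows with FamilySize == 1 to 1) can also be written as one boolean comparison cast to int. A sketch on hypothetical toy passengers; the resulting 0/1 column is the same.

```python
import pandas as pd

# Hypothetical toy passengers
toy = pd.DataFrame({"SibSp": [1, 0, 0, 3], "Parch": [0, 0, 2, 2]})

# Family size counts the passenger themself, hence + 1
toy["FamilySize"] = toy["SibSp"] + toy["Parch"] + 1
# Vectorized IsAlone: 1 when the passenger travels alone, else 0
toy["IsAlone"] = (toy["FamilySize"] == 1).astype(int)
print(toy)
```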

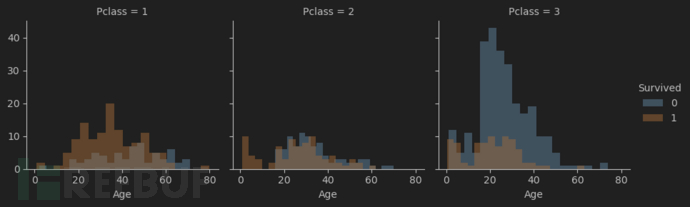

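New feature Age*Pclass

This is the one I understood least.

Create a new feature, Age*Pclass, that combines the Age and Pclass variables.

I tried plotting their relationship:

the combination of age and Pclass shows some correlation with survival,

so the two are simply multiplied together to capture it.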
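In the full code below the product is computed after Age has been binned, so it multiplies the age band (0 to 4) by the class number (1 to 3, where 3 is the lowest class); the value grows with both. A toy sketch with hypothetical rows:

```python
import pandas as pd

# Hypothetical rows: Age already binned to 0-4, Pclass in 1-3
toy = pd.DataFrame({"Age": [1, 2, 0, 3], "Pclass": [3, 1, 3, 2]})

# Interaction feature: age band times class number
toy["Age*Pclass"] = toy["Age"] * toy["Pclass"]
print(toy["Age*Pclass"].tolist())
```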

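Optimizing Feature Data

When building a classification model, continuous variables are often discretized (binned). After discretization the model tends to be more stable, and the risk of overfitting is reduced.

OK, I've learned a new way to reduce overfitting.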

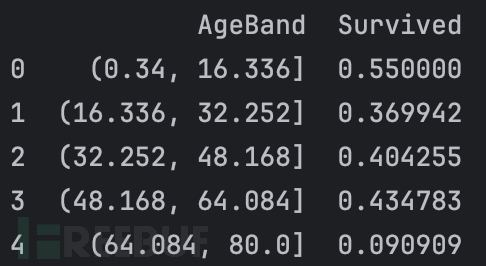

Age

train_df['AgeBand'] = pd.cut(train_df['Age'], 5)  # split Age into 5 equal-width bins

train_df[['AgeBand', 'Survived']].groupby(['AgeBand'], as_index=False).mean().sort_values(by='AgeBand', ascending=True)

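This bins the ages and, at the same time, shows the survival rate of each age band.

AgeBand is an interval, so it still has to be converted to a number. The cut points 16, 32, 48 and 64 below are the rounded edges of the five AgeBand intervals.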

for dataset in combine:
    dataset.loc[dataset['Age'] <= 16, 'Age'] = 0
    dataset.loc[(dataset['Age'] > 16) & (dataset['Age'] <= 32), 'Age'] = 1
    dataset.loc[(dataset['Age'] > 32) & (dataset['Age'] <= 48), 'Age'] = 2
    dataset.loc[(dataset['Age'] > 48) & (dataset['Age'] <= 64), 'Age'] = 3
    dataset.loc[dataset['Age'] > 64, 'Age'] = 4
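The chain of `.loc` assignments can also be expressed as one `pd.cut` call with explicit edges (an alternative, not what the post uses). With the default `right=True` each interval includes its upper edge, matching the `<=` comparisons, and `labels=False` returns the integer bin number. A sketch with hypothetical ages:

```python
import numpy as np
import pandas as pd

ages = pd.Series([4.0, 16.0, 22.0, 35.0, 54.0, 70.0, np.nan])  # hypothetical ages

# Same cut points as the .loc chain: (-inf, 16], (16, 32], (32, 48], (48, 64], (64, inf)
bins = [-np.inf, 16, 32, 48, 64, np.inf]
age_band = pd.cut(ages, bins=bins, labels=False)  # codes 0-4; missing ages stay NaN
print(age_band.tolist())
```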

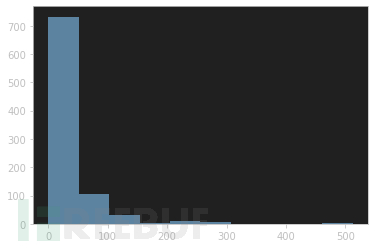

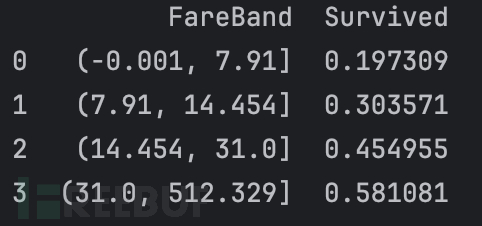

Fare

Note that Fare also needs to be binned.

First check its rough distribution with plt.hist(train_df['Fare']).

train_df['FareBand'] = pd.qcut(train_df['Fare'], 4)  # bin by sample quantiles: equal-frequency bins

train_df[['FareBand', 'Survived']].groupby(['FareBand'], as_index=False).mean().sort_values(by='FareBand', ascending=True)

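qcut deserves a mention: qcut() is a pandas function that bins data by sample quantiles into equal-frequency bins (each bin holds roughly the same number of samples). With 4 bins the cut points are the quartiles, i.e. Q1, the median (Q2) and Q3, not the median alone.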
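To see the difference from `pd.cut` (equal-width bins, as used for Age), here is a sketch on a hypothetical, strongly skewed set of fares: `qcut` puts two values in each of the four bins, while `cut` lets the single outlier stretch the range so most values land in the first bin and two bins stay empty.

```python
import numpy as np
import pandas as pd

fares = pd.Series([5, 7, 8, 10, 15, 20, 40, 300])  # hypothetical skewed fares

equal_freq = pd.qcut(fares, 4, labels=False)   # quartile edges: equal-frequency bins
equal_width = pd.cut(fares, 4, labels=False)   # equal-width edges over [5, 300]

# Number of values per bin (bins 0-3)
freq_counts = np.bincount(equal_freq.to_numpy(), minlength=4).tolist()
width_counts = np.bincount(equal_width.to_numpy(), minlength=4).tolist()
print(freq_counts)   # [2, 2, 2, 2]
print(width_counts)  # [7, 0, 0, 1]
```

This skew is presumably why Fare, unlike Age, is binned with qcut.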

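Quartiles

Quartiles are the three values (cut points) that divide sorted data into four equal parts.

First quartile (Q1): the median of the lower half of the data, i.e. the 25th percentile.

Second quartile (Q2): the median of the data, i.e. the 50th percentile.

Third quartile (Q3): the median of the upper half of the data, i.e. the 75th percentile.

Interquartile range (IQR): the difference between the third and first quartiles, IQR = Q3 - Q1, which measures the spread of the data.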
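The "median of each half" definition above is one of several quartile conventions. NumPy (and pandas' `quantile`, which `qcut` relies on) interpolates linearly by default, which can give different values on small samples. A sketch on the numbers 1 to 9:

```python
import numpy as np

data = np.arange(1, 10)  # 1, 2, ..., 9

# NumPy's default: linear interpolation between sorted values
q1, q2, q3 = np.percentile(data, [25, 50, 75])
iqr = q3 - q1
print(q1, q2, q3, iqr)  # 3.0 5.0 7.0 4.0

# "Median of each half" definition (the halves exclude the overall median)
lower, upper = data[data < q2], data[data > q2]
med_lower, med_upper = np.median(lower), np.median(upper)
print(med_lower, med_upper)  # 2.5 7.5
```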

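Likewise, these intervals need to be converted to int values. The cut points 7.91, 14.454 and 31 are the edges of the FareBand intervals.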

# Note: test_df has one missing Fare; fill it first (see the missing-value section below), otherwise astype(int) fails on NaN
for dataset in combine:
    dataset.loc[dataset['Fare'] <= 7.91, 'Fare'] = 0
    dataset.loc[(dataset['Fare'] > 7.91) & (dataset['Fare'] <= 14.454), 'Fare'] = 1
    dataset.loc[(dataset['Fare'] > 14.454) & (dataset['Fare'] <= 31), 'Fare'] = 2
    dataset.loc[dataset['Fare'] > 31, 'Fare'] = 3
    dataset['Fare'] = dataset['Fare'].astype(int)
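The thresholds above were read off the training FareBand by hand. The same idea can be done programmatically: `pd.qcut(..., retbins=True)` also returns the bin edges, which can be learned on the training data only and reused on the test data with `pd.cut`. The outer edges are opened to infinity so test values outside the training range still get a bin. A sketch with hypothetical fares:

```python
import numpy as np
import pandas as pd

train_fare = pd.Series([5.0, 7.0, 8.0, 10.0, 15.0, 20.0, 40.0, 300.0])  # hypothetical
test_fare = pd.Series([3.0, 9.0, 500.0])                                 # hypothetical

# Learn the quartile edges on the training data only
_, edges = pd.qcut(train_fare, 4, retbins=True)
# Replace the outer edges with -inf/inf so unseen extremes still fall into a bin
edges = np.concatenate(([-np.inf], edges[1:-1], [np.inf]))

train_band = pd.cut(train_fare, bins=edges, labels=False)
test_band = pd.cut(test_fare, bins=edges, labels=False)
print(edges[1:-1], test_band.tolist())
```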

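Filling Missing Values

The port of embarkation, Embarked, has three possible values: S, Q and C.

The initial analysis showed that it has missing values, but only a few (two in the training set), so fill them with the mode (the most frequent value).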

freq_port = train_df.Embarked.dropna().mode()[0]  # compute the mode

for dataset in combine:
    dataset['Embarked'] = dataset['Embarked'].fillna(freq_port)

for dataset in combine:
    dataset['Embarked'] = dataset['Embarked'].map({'S': 0, 'C': 1, 'Q': 2}).astype(int)

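As before, Embarked also has to be converted to numbers, which the map above does.

The test set also has one missing Fare value; fill it with the median:

test_df['Fare'] = test_df['Fare'].fillna(test_df['Fare'].median())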

After all the processing, the two tables look like this (first 10 rows of train_df, then of test_df):

| | Survived | Pclass | Sex | Age | Fare | Embarked | Title | IsAlone | Age*Pclass |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 3 | 0 | 1 | 0 | 0 | 1 | 0 | 3 |
| 1 | 1 | 1 | 1 | 2 | 3 | 1 | 3 | 0 | 2 |
| 2 | 1 | 3 | 1 | 1 | 1 | 0 | 2 | 1 | 3 |
| 3 | 1 | 1 | 1 | 2 | 3 | 0 | 3 | 0 | 2 |
| 4 | 0 | 3 | 0 | 2 | 1 | 0 | 1 | 1 | 6 |
| 5 | 0 | 3 | 0 | 1 | 1 | 2 | 1 | 1 | 3 |
| 6 | 0 | 1 | 0 | 3 | 3 | 0 | 1 | 1 | 3 |
| 7 | 0 | 3 | 0 | 0 | 2 | 0 | 4 | 0 | 0 |
| 8 | 1 | 3 | 1 | 1 | 1 | 0 | 3 | 0 | 3 |
| 9 | 1 | 2 | 1 | 0 | 2 | 1 | 3 | 0 | 0 |

| | PassengerId | Pclass | Sex | Age | Fare | Embarked | Title | IsAlone | Age*Pclass |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 892 | 3 | 0 | 2 | 0 | 2 | 1 | 1 | 6 |
| 1 | 893 | 3 | 1 | 2 | 0 | 0 | 3 | 0 | 6 |
| 2 | 894 | 2 | 0 | 3 | 1 | 2 | 1 | 1 | 6 |
| 3 | 895 | 3 | 0 | 1 | 1 | 0 | 1 | 1 | 3 |
| 4 | 896 | 3 | 1 | 1 | 1 | 0 | 3 | 0 | 3 |
| 5 | 897 | 3 | 0 | 0 | 1 | 0 | 1 | 1 | 0 |
| 6 | 898 | 3 | 1 | 1 | 0 | 2 | 2 | 1 | 3 |
| 7 | 899 | 2 | 0 | 1 | 2 | 0 | 1 | 0 | 2 |
| 8 | 900 | 3 | 1 | 1 | 0 | 1 | 3 | 1 | 3 |
| 9 | 901 | 3 | 0 | 1 | 2 | 0 | 1 | 0 | 3 |

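Building the Model and Predicting

The most headache-inducing part, data processing, is finally done; next is just plugging in models.

Use what you learn: I'll try every model I've studied.

Data Processing Code

First, here is the complete data-processing code from above in one piece.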

import warnings

warnings.filterwarnings("ignore")  # ignore warning messages

# Data processing and cleaning
import pandas as pd
import numpy as np
import random as rnd

# Visualization
import seaborn as sns
import matplotlib.pyplot as plt

# Machine learning algorithms
from sklearn.linear_model import LogisticRegression, Perceptron, SGDClassifier
from sklearn.svm import SVC, LinearSVC
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier

# Display options
pd.set_option('display.max_rows', None)  # show all rows
pd.set_option('display.max_columns', None)  # show all columns
pd.set_option('display.width', None)  # auto-detect width so output is not truncated
pd.set_option('display.max_colwidth', None)  # show full column contents

train_df = pd.read_csv('./titanic/train.csv')
test_df = pd.read_csv('./titanic/test.csv')

train_df = train_df.drop(['Ticket', 'Cabin'], axis=1)
test_df = test_df.drop(['Ticket', 'Cabin'], axis=1)
combine = [train_df, test_df]
print(train_df.head())

for dataset in combine:
    dataset['Title'] = dataset.Name.str.extract(r'([A-Za-z]+)\.', expand=False)

for dataset in combine:
    dataset['Title'] = dataset['Title'].replace(['Lady', 'Countess', 'Capt', 'Col',
        'Don', 'Dr', 'Major', 'Rev', 'Sir', 'Jonkheer', 'Dona'], 'Rare')
    dataset['Title'] = dataset['Title'].replace(['Mlle', 'Ms'], 'Miss')
    dataset['Title'] = dataset['Title'].replace('Mme', 'Mrs')

title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}
for dataset in combine:
    dataset['Title'] = dataset['Title'].map(title_mapping)
    dataset['Title'] = dataset['Title'].fillna(0)

train_df = train_df.drop(['Name', 'PassengerId'], axis=1)
test_df = test_df.drop(['Name'], axis=1)  # PassengerId stays in test_df for the submission file
combine = [train_df, test_df]

for dataset in combine:
    dataset['Sex'] = dataset['Sex'].map({'female': 1, 'male': 0}).astype(int)

train_df['AgeBand'] = pd.cut(train_df['Age'], 5)  # only used to find the cut points: 5 equal-width age bands

for dataset in combine:
    dataset.loc[dataset['Age'] <= 16, 'Age'] = 0
    dataset.loc[(dataset['Age'] > 16) & (dataset['Age'] <= 32), 'Age'] = 1
    dataset.loc[(dataset['Age'] > 32) & (dataset['Age'] <= 48), 'Age'] = 2
    dataset.loc[(dataset['Age'] > 48) & (dataset['Age'] <= 64), 'Age'] = 3
    dataset.loc[dataset['Age'] > 64, 'Age'] = 4
    dataset['Age'] = dataset['Age'].fillna(dataset['Age'].median())  # missing ages get the median age band

train_df = train_df.drop(['AgeBand'], axis=1)  # drop the AgeBand helper column added to train only to find the cut points
combine = [train_df, test_df]

for dataset in combine:
    dataset['FamilySize'] = dataset['SibSp'] + dataset['Parch'] + 1

for dataset in combine:
    dataset['IsAlone'] = 0
    dataset.loc[dataset['FamilySize'] == 1, 'IsAlone'] = 1

train_df = train_df.drop(['Parch', 'SibSp', 'FamilySize'], axis=1)
test_df = test_df.drop(['Parch', 'SibSp', 'FamilySize'], axis=1)
combine = [train_df, test_df]

for dataset in combine:
    dataset['Age*Pclass'] = dataset.Age * dataset.Pclass

freq_port = train_df.Embarked.dropna().mode()[0]

for dataset in combine:
    dataset['Embarked'] = dataset['Embarked'].fillna(freq_port)

for dataset in combine:
    dataset['Embarked'] = dataset['Embarked'].map({'S': 0, 'C': 1, 'Q': 2}).astype(int)

# The test set has one missing Fare; fill it with the median (assigned back, not inplace on a column)
test_df['Fare'] = test_df['Fare'].fillna(test_df['Fare'].median())

train_df['FareBand'] = pd.qcut(train_df['Fare'], 4)  # equal-frequency bins by sample quantiles; like AgeBand, only used to find the cut points

for dataset in combine:
    dataset.loc[dataset['Fare'] <= 7.91, 'Fare'] = 0
    dataset.loc[(dataset['Fare'] > 7.91) & (dataset['Fare'] <= 14.454), 'Fare'] = 1
    dataset.loc[(dataset['Fare'] > 14.454) & (dataset['Fare'] <= 31), 'Fare'] = 2
    dataset.loc[dataset['Fare'] > 31, 'Fare'] = 3
    dataset['Fare'] = dataset['Fare'].astype(int)

train_df = train_df.drop(['FareBand'], axis=1)
combine = [train_df, test_df]

print(train_df.head(10))
print(test_df.head(10))

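Model Selection

Here is the testing code. I'm tired, and this time the focus was on data preprocessing, so I didn't tune hyperparameters carefully and just tried several models. The random forest scored best (judged by accuracy on the training set).

Models used: logistic regression, support vector machine (SVC), KNN, naive Bayes classifier, perceptron, linear SVC, decision tree and random forest.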
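Every accuracy printed below is computed with `model.score(X_train, Y_train)`, i.e. on the same rows the model was fit on. An unpruned decision tree can memorize those rows, so training accuracy is an optimistic guide for choosing a model. A common alternative is k-fold cross-validation with scikit-learn's `cross_val_score`. The sketch uses synthetic data from `make_classification` as a stand-in for `X_train`/`Y_train`, so its numbers say nothing about the Titanic results.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for X_train / Y_train (not the Titanic data)
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

models = {
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "DecisionTree": DecisionTreeClassifier(random_state=0),
    "RandomForest": RandomForestClassifier(n_estimators=100, random_state=0),
}

results = {}
for name, model in models.items():
    train_acc = model.fit(X, y).score(X, y)  # accuracy on the rows the model was fit on
    cv_acc = cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()  # mean held-out accuracy over 5 folds
    results[name] = (train_acc, cv_acc)
    print(f"{name}: train={train_acc:.3f}  5-fold CV={cv_acc:.3f}")
```

On the real data, the same loop would take `X_train` and `Y_train` in place of `X` and `y`.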

The script starts with exactly the same imports and preprocessing as the data-processing code above, so that part is not repeated here; after running it, continue with the training code:

# Model training
X_train = train_df.drop("Survived", axis=1)
Y_train = train_df["Survived"]
X_test = test_df.drop("PassengerId", axis=1).copy()  # training and test sets must have the same feature columns
# Note: every accuracy below is measured on the training data itself, so it overstates accuracy on unseen data

# Logistic regression
logreg = LogisticRegression()
logreg.fit(X_train, Y_train)
Y_pred = logreg.predict(X_test)  # logreg.predict_proba(X_test)[:, 1] would give survival probabilities instead
acc_log = round(logreg.score(X_train, Y_train) * 100, 2)
print("Logistic regression accuracy: ", acc_log)

# Visualize the most important features (largest absolute coefficients)
coefficients = logreg.coef_[0]
features = X_train.columns
coef_df = pd.DataFrame({'Feature': features, 'Coefficient': coefficients})
coef_df['Absolute Coefficient'] = coef_df['Coefficient'].abs()
coef_df = coef_df.sort_values(by='Absolute Coefficient', ascending=False)
plt.figure(figsize=(8, 6))
sns.barplot(x='Absolute Coefficient', y='Feature', data=coef_df.head(10))
plt.tight_layout()
plt.show()

# Support vector machine
svc = SVC()
svc.fit(X_train, Y_train)
Y_pred = svc.predict(X_test)
acc_svc = round(svc.score(X_train, Y_train) * 100, 2)
print("SVM accuracy: ", acc_svc)

# KNN
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(X_train, Y_train)
Y_pred = knn.predict(X_test)
acc_knn = round(knn.score(X_train, Y_train) * 100, 2)
print("KNN accuracy: ", acc_knn)

# Naive Bayes classifier
gaussian = GaussianNB()
gaussian.fit(X_train, Y_train)
Y_pred = gaussian.predict(X_test)
acc_gaussian = round(gaussian.score(X_train, Y_train) * 100, 2)
print("Naive Bayes accuracy: ", acc_gaussian)

# Perceptron
perceptron = Perceptron()
perceptron.fit(X_train, Y_train)
Y_pred = perceptron.predict(X_test)
acc_perceptron = round(perceptron.score(X_train, Y_train) * 100, 2)
print("Perceptron accuracy: ", acc_perceptron)

# Linear SVC
linear_svc = LinearSVC()
linear_svc.fit(X_train, Y_train)
Y_pred = linear_svc.predict(X_test)
acc_linear_svc = round(linear_svc.score(X_train, Y_train) * 100, 2)
print("Linear SVC accuracy: ", acc_linear_svc)

# Decision tree
decision_tree = DecisionTreeClassifier()
decision_tree.fit(X_train, Y_train)
Y_pred = decision_tree.predict(X_test)
acc_decision_tree = round(decision_tree.score(X_train, Y_train) * 100, 2)
print("Decision tree accuracy: ", acc_decision_tree)

# Random forest
random_forest = RandomForestClassifier(n_estimators=100)
random_forest.fit(X_train, Y_train)
Y_pred = random_forest.predict(X_test)  # trained last, so these predictions go into the submission
acc_random_forest = round(random_forest.score(X_train, Y_train) * 100, 2)
print("Random forest accuracy: ", acc_random_forest)

submission = pd.DataFrame({
    "PassengerId": test_df["PassengerId"],
    "Survived": Y_pred
})
submission.to_csv('./submission.csv', index=False)
